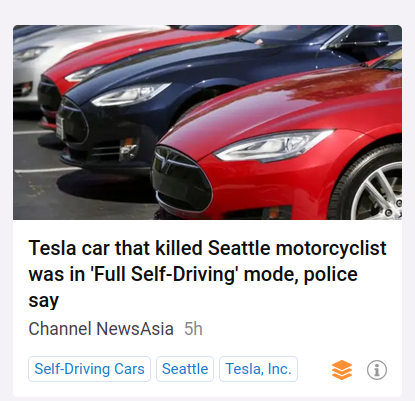

Inside the WSJ’s Investigation of Tesla’s Autopilot Crash Risks

Cars using Autopilot sometimes struggle to recognize obstacles or stay on the road, and Tesla withholds key crash details from the public.

To understand safety issues surrounding Tesla’s Autopilot, The Wall Street Journal undertook a comprehensive analysis of crash data, uncovering previously obscured details by combining heavily redacted federal filings with local police records.

The Journal analyzed filings that manufacturers are required to submit to the National Highway Traffic Safety Administration when cars crash while using autonomous driving technology. However, the public versions of these reports are heavily redacted, obscuring key details like the crash narrative and even the exact date.

To gain a clearer picture, the Journal filed public records requests for police crash databases with agencies in more than a dozen states. Reporters spent months compiling these records into a unified database and matching them with corresponding NHTSA submissions. This process allowed the Journal to learn details about more than 200 crashes involving Tesla’s Autopilot, revealing previously hidden patterns in the detailed narratives and diagrams in police reports.

Here are some of the key findings:

Autopilot struggles with obstacles

While many crashes involved incidental collisions, a number occurred when Teslas on Autopilot struck clearly visible obstacles or ran off the road at T-intersections, suggesting challenges with the system’s ability to navigate these scenarios. In several crashes, the obstacles included one or more police or fire vehicles with emergency lights flashing.

Autopilot relies mostly on software processing images from onboard cameras to detect obstacles, although some models use a radar backup. Other vehicle manufacturers supplement cameras with radar and lasers to detect obstacles directly. Elon Musk, Tesla’s chief executive, has said such sensors are unnecessary. Tesla says that drivers are instructed that while Autopilot is engaged, they must keep their hands on the steering wheel and remain ready to retake control at all times.

Autopilot crashes also involve veering

The Journal also found a number of crashes in which a vehicle with Autopilot engaged lost traction and veered off the road, including cases on wet pavement. Tesla says that such cases may occur when “environmental factors contribute to the crash, such as with heavy rains and hydroplaning.”

NHTSA findings echo WSJ analysis

The Journal’s findings align with a recently released NHTSA analysis that identified a trend of “avoidable crashes” involving Teslas striking visible obstacles or running off wet roads after losing traction. Tesla says it disagreed with NHTSA’s analysis and pointed out that the agency didn’t seek a recall. Instead, Tesla says it voluntarily issued a software update for up to two million vehicles last year that would improve driver warnings and attentiveness when Autopilot was in operation.

Tesla and NHTSA withhold information from the public

Despite NHTSA guidelines encouraging transparency, both Tesla and the agency itself withhold key information from the public about crashes. Tesla blocks the release of descriptive details about its reported crashes, saying those details amount to confidential business information. NHTSA, meanwhile, cites its obligation to protect personal privacy under federal law as a justification for withholding specific information such as the exact date, address, zip code and location of the crash.

Tesla has reported over 1,200 Autopilot-related crashes to NHTSA since 2021

These incidents represent about 80% of all crashes reported to the regulator involving autonomous driving technology. The Journal matched 222 of these crashes to local police reports. Tesla attributes its crash count in NHTSA’s data to the widespread use of its vehicles and Autopilot technology, as well as its superior crash detection and reporting systems. The company maintains that “Autopilot has been used by millions of drivers to safely drive billions of miles” and has prevented “innumerable” crashes.

Tesla tightly controls access to crash data

Teslas store video and operating data that can be transmitted to the company’s servers. Owners can review their own vehicle’s video on its dashboard. And Tesla says that it lets owners download logs of operating data that include hundreds of readings and conditions that are time stamped to the millisecond. However, getting full details of what the cameras record and how they process those images to make Autopilot work requires expert access to the vehicle’s computer. Tesla says it shares information when required by police or in lawsuits.

John West contributed to this article.

Write to Paul Overberg at paul.overberg@wsj.com, Emma Scott at emma.scott@wsj.com and Frank Matt at francis.matt@wsj.com